It is desirable to guard against the possibility of exaggerated ideas that might arise as to the powers of the Analytical Engine. In considering any new subject, there is frequently a tendency, first, to overrate what we find to be already interesting or remarkable; and, secondly, by a sort of natural reaction, to undervalue the true state of the case, when we do discover that our notions have surpassed those that were really tenable. The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform. It can follow analysis; but it has no power of anticipating any analytical relations or truths. Its province is to assist us in making available what we are already acquainted with.

—Augusta Ada King, the Countess of Lovelace, 1843

The first words uttered on a controversial subject can rarely be taken as the last, but this comment by British mathematician Lady Lovelace, who died in 1852, is just that—the basis of our understanding of what computers are and can be, including the notion that they might come to acquire artificial intelligence, which here means “strong AI,” or the ability to think in the fullest sense of the word. Her words demand and repay close reading: the computer “can do whatever we know how to order it to perform.” This means both that it can do only what we know how to instruct it to do, and that it can do all that we know how to instruct it to do. The “only” part is what most earlier writings on the subject have been concerned with, but the “all” part is just as important—it explains (for those open to explanation) why the computer keeps performing feats that seem to show that it’s thinking, while critics, like me, continue to insist that it is doing no such thing.

The amazing feats achieved by computers demonstrate our progress in coming up with algorithms that make the computer do valuable things for us. The computer itself, though, does nothing more than it ever did, which is to do whatever we know how to order it to do—and we order it to do things by issuing instructions in the form of elementary operations on bits, the 1s and 0s that make up computer code. The natural-language instructions offered by higher-order programming systems seem to suggest that computers understand human language. Such a delusion is common among those who don’t know that such higher-level instructions must first be translated into the computer’s built-in machine language, which offers only operations on groups of bits, before the computer can “understand” and execute them. If you can read Dante only in English translation, you cannot be said to understand Italian.
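
To make "elementary operations on bits" concrete, here is a minimal sketch in Python of addition built from nothing but AND, XOR, and shift. The function name is hypothetical, and the loop only imitates in software what a real machine does in circuitry. It is an illustration of the idea, not a description of any particular processor.

```python
def add_with_bit_operations(a: int, b: int) -> int:
    """Add two non-negative integers using only AND, XOR, and shift."""
    while b != 0:
        carry = a & b    # positions where both bits are 1 generate a carry
        a = a ^ b        # add each bit position, ignoring the carries
        b = carry << 1   # move each carry one position to the left
    return a


print(add_with_bit_operations(19, 23))
```

Every step here is a fixed manipulation of 1s and 0s; the plus sign that a programmer types in a higher-level language is shorthand for a routine of this kind.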

Adding to the confusion surrounding claims of AI is the lack of agreement on what technical developments deserve the name. In practice, new software created by an institution with “AI” in its title is often credited with being AI when it first appears—but these supposed breakthroughs are regularly demoted to plain old applications when their novelty wears off. “It’s a crazy position to be in,” laments Martha Pollack, a former professor at the Artificial Intelligence Laboratory at the University of Michigan, executive editor of the Journal of Artificial Intelligence Research, and since 2017, the president of Cornell University. “As soon as we solve a problem, instead of looking at the solution as AI, we come to view it as just another computer system,” she told Wired. One sympathizes with Pollack, who is no doubt familiar with the last of science-fiction writer Arthur C. Clarke’s three laws about the future: “Any sufficiently advanced technology is indistinguishable from magic.” But she may not have appreciated that “is indistinguishable from” is a symmetric relation, just as readily yielding Halpern’s Corollary: “Any sufficiently explained magic is indistinguishable from technology.”

PART I: Only what we know how to order it to perform

The computer can’t think, and its value lies precisely in that disability: it lets us think and preserves our thoughts in executable form. It has so often been touted as the first machine that does think, or at least will think someday, that this assertion may seem paradoxical or perverse, but it is in fact merely unconventional. The computer is a greatly misunderstood device, and the misunderstanding matters greatly.

Computers routinely carry out tasks without error or complaint, and they are very fast. Speed has the power to overawe and mislead us. We all know, for example, that movies are merely sequences of still images projected onto the screen so rapidly that the brain’s visual cortex transforms discrete images into continuous action. But knowing this does not prevent the trick from working. Likewise, the speed with which a computer achieves its effects supports the notion that it’s thinking. If we were to slow it down enough to follow it step by step, we might soon conclude that it was the stupidest device ever invented. That conclusion, however, would be just as invalid: the computer is neither intelligent nor stupid, any more than a hammer is.

Then there is our tendency to personify any device, process, or phenomenon with which we come into contact: our houses, cars, ships, hurricanes, nature itself. We invest with personality and purpose just about everything we encounter. The computer makes us particularly susceptible to such thinking, in that its output is often symbolic, even verbal, making it seem especially human. When we encounter computer output that looks like what we produce by thinking, we are liable to credit the computer with thought, on the fallacious grounds that if we are accustomed to finding some good thing in a particular place, it must originate in that place. By that rule of inference, there would have to be an orchestra somewhere inside your CD player and a farm in your refrigerator. Urban children are understandably likely to suppose that food originates at the supermarket; AI enthusiasts are less understandably prone to thinking that intelligent activity originates within the computer.

Computers likewise benefit from our preference for the exciting positive rather than the dreary negative. If it were widely understood that the computer isn’t thinking, how could journalists continue to produce pieces with headlines like “Will Robots Take Over the World?” and “AI Is the Next Phase of Human Evolution,” and all the other familiar scare stories that editors commission? These stories implicitly attribute not just intellect to the computer but also volition—the computer, if we are to get alarmed, must want to conquer us or replace us or do something equally dramatic. The reading public acts as a co-conspirator. Most of us would prefer a sensational, scary-but-not-too-scary story about how machines are threatening or encroaching on us over a merely factual account of what’s going on. There aren’t any man-eating alligators in the sewers.

With regard to computers, though, the sober truth is more interesting than the sensational falsehoods. We tend to credit machines with thinking just when they are displaying characteristics most unlike those of thinking beings: the computer never gets bored, has perfect recall, executes algorithms faultlessly and at great speed, never rebels, never initiates any new action, and is quite content with endlessly repetitive tasks.

Is such an entity thinking—that is, doing what human minds do?

A prime example of our tendency to believe in thinking computers comes from the game of chess. When IBM’s Deep Blue beat Garry Kasparov, many AI champions were ready to write QED. After all, isn’t chess-playing ability one of the hallmarks of intellectual power? And if a computer can beat the world champion, mustn’t we conclude that it has not merely a mind but one of towering strength? In fact, the computer does not play chess at all, let alone championship chess. Chess is a game that has evolved over centuries to pose a tough but not utterly discouraging challenge to humans, with regard to specifically human strengths and weaknesses. One human capacity it challenges is the ability to concentrate; another is memory; a third is what chess players call sitzfleisch—the ability to resist the fatigue of sitting still for hours. The computer knows nothing of any of these. Finally, chess prowess depends on players’ ability to recognize general situations that are in some sense “like” ones they’ve seen before, either over the board or in books. Again, the computer largely sidestepped what is most significant for humans, and hence for the game, by analyzing every position from scratch, and relying on speed to make up for its weakness at gestalt pattern recognition.

Perhaps most effective, though, in making it seem that the computer can think is our occasional surprise at what a computer does—a surprise that puts us in a vulnerable condition, easy pickings for AI enthusiasts: “So, you didn’t think the computer could do such-and-such! Now that you see it can, you have to admit that computers can think!”

But surprise endlessly generates paradoxes and problems. It names a sensation we cannot even be sure we’ve experienced. What we once took for a surprise may now seem no such thing. Consider this example: a stage magician announces that he will make an elephant disappear and ushers it behind a curtain. He waves his wand, draws the curtain to show that the elephant has disappeared, and we are duly surprised. What if he had drawn the curtain only to reveal Jumbo still placidly standing there? What would we have experienced? Why, a real surprise. And if, while we are laughing at the magician’s chagrin, the elephant suddenly vanishes like a pricked bubble, what would we call our feelings at that? Surprise again.

In his influential 1950 essay “Computing Machinery and Intelligence,” British computer scientist Alan Turing rejects Lady Lovelace’s characterization of the computer, and, for many AI champions, this disposes of her. But Turing not only fails to refute her, he also resorts to the shabby suggestion that her omission of “only” from “It can do whatever we know how to order it to perform” leaves open the possibility that she did not mean utterly to deny computer originality. The suggestion is shabby because he knew perfectly well that she meant “only,” as he inadvertently admits when, elsewhere in the same paper, he paraphrases her statement as “the machine can only do what we tell it to.”

When members of the AI community need some illustrious forebear to lend dignity to their position, they often invoke Turing and his paper as holy writ. But when critics ask why the Turing test—a real-world demonstration of artificial intelligence equal to that of a human—has yet to be successfully performed, his acolytes brush him aside as an early and rather unsophisticated enthusiast. Turing’s ideas, we are told, are no longer the foundation of AI work, and we are tacitly invited to relegate the paper to the shelf where unread classics gather dust, even while according its author the profoundest respect. The relationship of the AI community to Turing is much like that of adolescents to their parents: abject dependence accompanied by embarrassed repudiation.

PART II: All the things we know how to order it to perform

The place to begin to understand the computer, and to appreciate the value of its inability to think, is with the algorithm. An algorithm is a complete, closed procedure for accomplishing some well-defined task. It consists of a finite number of simple steps, where “simple” means within the powers of anyone of ordinary ability and education. We have all executed algorithms, at least arithmetic ones—long division or multiplication of one multidigit number by another, for example. All algorithms are, in principle, executable by humans, but there is a host of potentially valuable algorithms that we cannot possibly carry out unaided because they consist of millions or billions of steps. The computer, however, is perfectly suited to the task. Because it is fitted to do this, we begin, paradoxically, to hear claims that the computer is thinking. Yet the computer never behaves mysteriously, never appears to have volition. In short, it seems to be thinking precisely because, being unable to “think for itself,” it’s the perfect vehicle for and executor of preserved human thought—of thought captured and “canned” in the form of programmed algorithms. And we must not be so thunderstruck at our success in building a perfectly obedient servant that we take it for our peer, or even our superior. Jeeves is marvelous but is, after all, a servant; Bertie Wooster is master.
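
The long division mentioned above can be written out as a short sketch in Python. The function name and the restriction to non-negative whole numbers are assumptions made for illustration. Each step is one a schoolchild could perform by hand: bring down a digit, then subtract the divisor as many times as it fits.

```python
def long_division(dividend: int, divisor: int) -> tuple[int, int]:
    """Schoolbook long division, one digit at a time.

    Returns (quotient, remainder). Assumes dividend >= 0 and divisor > 0.
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    quotient_digits = []
    remainder = 0
    for digit in str(dividend):
        remainder = remainder * 10 + int(digit)  # bring down the next digit
        count = 0
        while remainder >= divisor:              # how many times does it fit?
            remainder -= divisor
            count += 1
        quotient_digits.append(str(count))
    return int("".join(quotient_digits)), remainder


print(long_division(1843, 7))
```

The procedure is finite and closed, and no step calls for judgment. That is why a person and a machine can carry it out interchangeably, differing only in speed.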

We ought to clear the computer out of the way, once and for all, in this and every discussion of AI, since it has no necessary part in them. The computer merely executes a program. But even the program is extraneous to the fundamental issue—it is itself only one of an indefinite number of representations of the underlying algorithm, just as there are an indefinite number of sentences that can express a given proposition. The role of the computer is purely economic: it is the first machine that can execute lengthy algorithms quickly and cheaply enough to make their development economically attractive. Yet it is the algorithm that is ultimately the subject of contention.

PART III: The specter of autonomy

Many people suppose that computers do not merely think but do so independently, that we may soon lose control over them as they become “autonomous” and begin doing things we don’t want them to do. This is especially frightening when the computer is a military robot armed with weapons that can kill humans.

In late 2007, articles and books about the ethical implications of military robots appeared with such frequency that one could hardly open any serious journal without coming across another warning of the terrible problems they posed. That November, the Armed Forces Journal published several articles devoted to the subject, placing special emphasis on how to control robot weapons now that they seemed to be on the verge of acting autonomously. The November 16 issue of Science also focused on the ethical issues robots seemed to raise. In 2013, a new wave of such articles appeared as robots began to be called “drones,” suggesting they are something new. The fears these pieces raise about robots “taking over” or “getting away from us” are baseless, but there is indeed something frightening in them: they show that there are some widespread misconceptions about robots, and these misconceptions are going to cause serious problems for us unless corrected very soon.

Two great sources of confusion typically feature in discussions of robots and their supposed ethical issues. The first is that we seem to have a rich literature to draw upon for guidance: science fiction. Whole libraries of books and magazines, going back at least as far as Mary Shelley’s Frankenstein, deal with the creation of beings or the invention of machines that we cannot control. It would not be surprising if literary artists turned to this literature for insight. What is surprising—and alarming—is that scientists and military officers are doing the same thing, under the false impression that science fiction sheds light, however fancifully, on the issues we are now called upon to face.

The acknowledged dean of modern imaginative literature on robots was Isaac Asimov (1920–1992). For over half a century, the astonishingly prolific Asimov wrote science-fiction stories and novels about future civilizations in which humanoid robots play an important role. He made himself so much the proprietor of this subgenre of literature that many of the conventions about man-robot relationships first introduced in his writings have been adopted by other writers. In particular, his “three laws of robotics,” first announced in one of the earliest of his robot stories, are now virtually in the public domain. These laws, wired unalterably into the “positronic brains” that are the seat of his robots’ intelligence, are as follows:

1. A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given to it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The difficulty of applying the three laws in real life, let alone of embodying them in electronic circuitry, is ignored; they are simply literary conventions, allowing the author to plot his stories. Asimov’s robots face problems only at the margins, such as when they have to interpret the laws in tricky cases—but because they can think, and wish humans well, they regularly overcome these difficulties.

Unfortunately, the Asimovian robot, thinking and making decisions, is what almost everyone envisions when the topic of robots and how to control them comes up. We are asked to deal with certain questions in this context: Do we want a computer or a human being to decide whether to kill someone? Who gets the blame if a robot does something bad? What rights might a robot have, since it apparently has some degree of free will?

Armed Forces Journal and Science could hardly be more different in ethos, but they are united in promulgating a view of robots that is false and dangerous—and in large part the unintended legacy of Asimov and his school. In a guest editorial in Science, for example, Canadian science-fiction author Robert J. Sawyer writes:

As we make robots more intelligent and autonomous, and eventually endow them with the independent capability to kill people, surely we need to consider how to govern their behavior and how much freedom to accord them—so-called roboethics. Science fiction dealt with this prospect decades ago; governments are wrestling with it today. … Again, science fiction may be our guide as we sort out what laws, if any, to impose on robots and as we explore whether biological and artificial beings can share this world as equals.

The idea that Asimov’s three laws (what Sawyer has mainly in mind) should help governments decide matters of life and death is chilling.

The second great misconception is entwined with the first, and widely entertained by policymakers. It is that we have succeeded, or are about to succeed, in creating robots with volition and autonomy. We should be embarrassed at our presumption in supposing that we have created machines with minds and wills, but much more serious is the danger that we will sincerely come to feel that misdeeds committed by robots are the fault of the robots themselves, rather than of their programmers and users. Consider the introduction to the Armed Forces Journal issue on military uses of robots. “The next ethical minefield is where the intelligent machine, not the man, makes the kill decision,” it reads. But no existing or presently conceivable robot will ever make such a decision. Whether a computer kills a man or dispenses a candy bar, it will do so because its program, whether or not the programmer intended it, has caused it to do so. The programmer may be long gone; it may be impossible even to identify him; the action he has inadvertently caused the robot to take may horrify him—but if anyone is responsible, it will be either the programmer or the commander, who despite knowing that robots have no common sense, dispatches one to deal with a situation for which common sense is required. In no case will responsibility rest with the robot.

Our society, in both peace and war, regularly sets in motion processes that are so complex that it is rarely possible to attach blame or credit for their outcomes to any one person. We recognize this when we refer off-handedly to the “law of unintended consequences.” The designer of a robotic system might put any number of layers of processing between the system’s initial detection of a situation and the actions that it will finally perform as a result. If the designer puts in enough layers, it may become impossible to foresee just how the system will deal with a particular scenario. This uncertainty may cause observers—and sometimes, amazingly, even the designer—to think that the system is deciding the issue. They are wrong. The system will likely execute a sequence of programmed steps much faster than anyone can follow, but that does not mean it is exercising judgment, for it has none. Yes, it may seem so, just as that series of still photographs, projected at the right speed, will convince our eyes that they’re seeing continuous motion. We will undoubtedly continue to personify robots and other computerized systems much as we personify everything. It’s a harmless human habit, so long as we don’t forget the underlying reality.

The “should a man or a robot decide?” debate, then, is wrongly framed. The only correct question is whether the person making the decision will be the designer-programmer of the robot or an operator working with the robot at the moment of its use. The advantage of having the designer-programmer make the decision is that it will be made by someone working calmly and deliberately, with advice and input from others, and with time for testing, revising, and debugging. The algorithm programmed into the system will likely be the best available. The disadvantage is that if the designer-programmer has overlooked some critical factor, the robot may do something disastrous.

The advantage of having users make the decision is that, at least in principle, they will take into account the context in which the robot is being applied and make whatever changes may be necessary to adapt the robot to the situation. The disadvantage is that users will be acting on the spur of the moment, perhaps in panic mode, and without the benefit of anyone else’s advice.

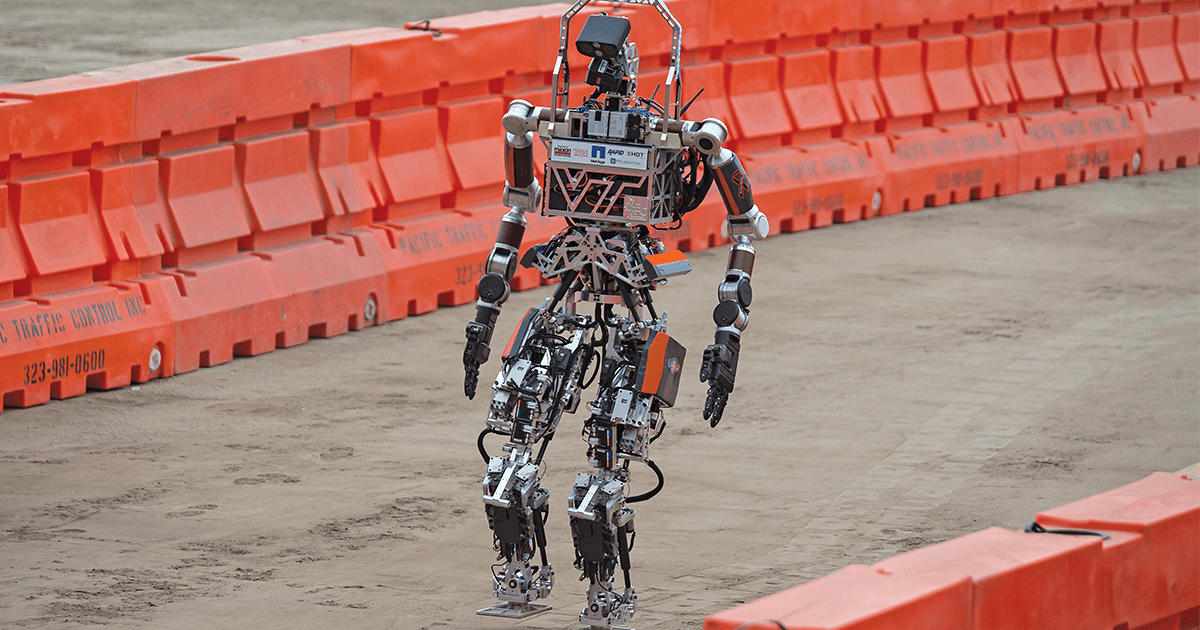

A prototype of a humanoid first responder is demonstrated at a U.S. Defense Department robotics event in California in 2015. (U.S. Navy photo by John F. Williams)

Which is the right way to go? It depends on the situation. Some systems will be so complex that in the heat of battle, the user will be unable to correct an oversight made by a designer-programmer. An antiballistic missile defense system is an example of a computer-based system whose complexity is such that no user can be expected to make last-minute improvements to it at the moment of use; for better or worse, whatever decisions designers built into the system are what we will have to live, or die, with.

At the other end of the spectrum of possibilities, an unmanned aerial vehicle targeting a terrorist leader with a missile is an example of a system that the user should be enabled to control up to the last second, as the decisions to be made are dependent on unforeseeable details. Most robotic applications, I think, would fall at some intermediate point along this spectrum, and we must think carefully to arrive at an optimum balance. But nothing of value can be accomplished if we get the question wrong. As Francis Bacon noted, “Truth emerges more readily from error than from confusion.” And so we must think through the best way to handle each type of situation, knowing that our robots will do exactly what we have told them to do (not necessarily what we want them to do), and leave the dreams of thoughtful, loving, and above all, autonomous robots to Asimov and other writers of fiction.

PART IV: “Machine learning,” the new AI

Since 2015, there has been a resurgence of excitement over AI, and not only in programming circles. Industrial and military organizations throughout the world are all trying to take advantage of a new technical development in programming that bears a variety of names—“neural networks” and “deep learning” among them—but is founded on the idea that giving computers enough data and a very general goal (e.g., “Learn to identify the subject of these pictures”) can enable them to produce useful information for us without our telling them exactly how to do it.

A computer can recognize images of things of interest—cats, say—without being told how to recognize them. This is done by (1) having the computer scan thousands of pictures of cats of many different kinds, in many different contexts; (2) having it extract common features from these pictures and saving them as a tentative solution; and (3) feeding the computer another batch of pictures, some of cats and some of other things, and testing whether it can distinguish the cat pictures from the noncat pictures. So far, the computer can do this with significantly greater success than chance would account for. Law enforcement and military officials hope such technology might be able to find terrorists in pictures of crowds, or defects in manufactured articles, or targets in photographs of terrain.
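
The three steps above can be sketched on a toy scale. This Python example is an assumption-laden stand-in, not a real image recognizer: each "picture" is a made-up list of four numbers, the "common features" are simply the average of the cat pictures, and the acceptance threshold (1.5 times the distance of the farthest training cat) is an arbitrary choice.

```python
import math


def average_picture(pictures):
    """Step 2: take the per-pixel average as the 'common features'."""
    count = len(pictures)
    return [sum(p[i] for p in pictures) / count for i in range(len(pictures[0]))]


def distance(a, b):
    """Euclidean distance between two pictures."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Step 1: scan some cat pictures (hypothetical 4-pixel images).
cat_training = [
    [0.9, 0.8, 0.1, 0.2],
    [0.8, 0.9, 0.2, 0.1],
    [1.0, 0.7, 0.0, 0.3],
]

# Step 2: save the extracted features as a tentative solution.
prototype = average_picture(cat_training)
threshold = 1.5 * max(distance(p, prototype) for p in cat_training)


def looks_like_cat(picture):
    """Accept a picture if it lies close enough to the cat prototype."""
    return distance(picture, prototype) <= threshold


# Step 3: test on a fresh batch, some cats and some not.
test_batch = [
    ([0.85, 0.85, 0.15, 0.15], True),
    ([0.95, 0.75, 0.05, 0.25], True),
    ([0.1, 0.2, 0.9, 0.8], False),
    ([0.5, 0.5, 0.5, 0.5], False),
]
correct = sum(looks_like_cat(p) == is_cat for p, is_cat in test_batch)
print(f"{correct} of {len(test_batch)} classified correctly")
```

Nothing in the program was told what a cat is, yet every line of it was written by a person, and its "recognition" is arithmetic on the numbers it was given.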

The programs that do this are modeled roughly on our knowledge of the structure of the human brain as multiple layers of interconnected neurons, although the importance of this analogy is unclear. The programmer begins by assigning neutral default weights to each layer of neurons in the program. When tested, if layers recognize cats better than chance would have it, or better than previous results, they get “rewarded”—their weight is increased. If they fail to do so, they are “punished” by having their weight decreased. It is hoped that this process, like evolution by natural selection, will produce an ever-improving cat-recognition program. Success to date has caused a great deal of excitement and investment of resources.
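
A much simpler relative of this reward-and-punish scheme is the classic perceptron learning rule, sketched below in Python. This is a swapped-in technique chosen for brevity: modern deep-learning systems adjust their weights by gradient-based methods rather than by this rule, and the two-number "pictures," function names, and learning rate here are all hypothetical.

```python
def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Start from neutral weights and nudge them after each wrong guess."""
    size = len(examples[0][0])
    weights = [0.0] * size   # neutral default weights
    bias = 0.0
    for _ in range(epochs):
        for features, is_cat in examples:
            activation = sum(w * x for w, x in zip(weights, features)) + bias
            guess = 1 if activation > 0 else 0
            error = is_cat - guess   # 0 if right, +1 or -1 if wrong
            for i in range(size):
                # Raise weights that should have fired, lower those that misfired.
                weights[i] += learning_rate * error * features[i]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Return 1 (cat) or 0 (not cat) for one example."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical training data: (features, 1 for cat / 0 for not cat).
examples = [
    ([1.0, 0.0], 1),
    ([0.9, 0.2], 1),
    ([0.1, 0.9], 0),
    ([0.0, 1.0], 0),
]
weights, bias = train_perceptron(examples)
print(weights, bias)
```

The "reward" and "punishment" amount to adding or subtracting a small number. The program improves only in the sense that its arithmetic comes to agree with the labels people supplied.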

What such a program is looking for when deciding whether a given picture shows a cat, we don’t know: it could be that the program has noted that in all samples given it of cat pictures, a certain pixel in the upper right corner of each image was “on,” and that’s what it looks for rather than anything truly feline. At present the program’s idea of the significant feature, whatever it is, is unknown, and that’s where the champions of AI find their opening: maybe in learning to recognize cats without being told what to look for, “the computer is thinking!” But unfortunately for the enthusiast, some investigators are already trying to determine just what it is that the new AI programs are doing in their internal levels. When they succeed, we will be back in the situation so lamented by Pollack: that of seeing an AI “breakthrough” or “major advance” as just more software, which is what it will be.
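
The upper-right-pixel scenario is easy to reproduce in miniature. In this Python sketch, a deliberately crude learner picks whichever single pixel best matches the labels. The learner and the 2x2 "pictures" are hypothetical, and the data are rigged so that the upper-right pixel happens to be on in every cat picture and off in every other.

```python
def most_predictive_pixel(pictures, labels):
    """Return the index of the one pixel that agrees with the labels most often."""
    best_index, best_score = 0, -1
    for i in range(len(pictures[0])):
        score = sum(1 for p, label in zip(pictures, labels) if p[i] == label)
        if score > best_score:
            best_index, best_score = i, score
    return best_index


# Pictures flattened as [upper-left, upper-right, lower-left, lower-right].
pictures = [
    [1, 1, 0, 1],  # cat
    [0, 1, 1, 1],  # cat
    [1, 1, 1, 0],  # cat
    [1, 0, 0, 1],  # not a cat
    [0, 0, 1, 1],  # not a cat
    [1, 0, 1, 0],  # not a cat
]
labels = [1, 1, 1, 0, 0, 0]

chosen = most_predictive_pixel(pictures, labels)


def classify(picture):
    """Call it a cat whenever the chosen pixel is on."""
    return picture[chosen]


dog_with_corner_pixel_on = [0, 1, 0, 0]
print(chosen, classify(dog_with_corner_pixel_on))
```

The learner scores perfectly on its training pictures and then labels a dog a cat, because what it "looks for" has nothing feline about it. Once the rule is inspected, the mystery is gone and only software remains.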

The intellectual incoherence of believing that computers can think or make independent decisions is self-evident, but for those unconcerned with such incoherence, there are practical dangers that may be more impressive.

On April 25, 1984, in a hearing before a Senate subcommittee on arms control, a heated dispute broke out between several senators, particularly Paul E. Tsongas (D-Mass.) and Joseph R. Biden Jr. (D-Del.), and some officials of President Reagan’s administration, particularly Robert S. Cooper, the director of the Defense Advanced Research Projects Agency (DARPA), and the president’s science adviser, George Keyworth, about reliance on computers for taking retaliatory action in case of a nuclear attack on this country.

A new program called the Strategic Defense Initiative, better known as “Star Wars,” was studying the feasibility of placing into Earth orbit a system of laser weapons that would disable Soviet intercontinental missiles during their “boost” phase, thereby preventing them from deploying their warheads. But the system’s effectiveness, the administration officials told the subcommittee, would be predicated on its ability to strike so quickly that it might be necessary for the president to cede his decision-making powers to a computer.

According to the Associated Press, members of the subcommittee were outraged by the idea. “Perhaps we should run R2-D2 for President in the 1990s,” Tsongas said. “At least he’d be on line all the time. … Has anyone told the President that he’s out of the decision-making process?”

“I certainly haven’t,” Keyworth responded.

Biden worried that a computer error might even initiate a nuclear war by provoking the Soviets to launch missiles. “Let’s assume the President himself were to make a mistake …” he said.

“Why?” interrupted Cooper. “We might have the technology so he couldn’t make a mistake.”

“OK,” said Biden. “You’ve convinced me. You’ve convinced me that I don’t want you running this program.”

The crucial point here is that the two parties, although diametrically opposed on the issue of whether computer involvement in a missile-launching system was a good thing, agreed that such involvement might be described as “letting the computer decide” whether missiles were to be launched, in contrast to “letting the President decide.” Neither party seemed to realize that the real issue was the utterly different one of how the president’s decision was to be implemented, not whether it was his or the computer’s decision that was to prevail; that what is really in question is whether the president, in the event of nuclear attack, will seek to issue orders by word of mouth, generated spontaneously and on the spur of the moment, or by activating a computer-based system into which he has previously had his orders programmed. The director of DARPA accepted as readily as did the senators that the introduction of the computer into the system would be the introduction of an independent intelligence that might override the judgment of the duly elected political authorities; he differed only in considering that such insubordination might be a desirable thing.

These political leaders were conducting their debate, and formulating national policy, on the basis of this nonsensical assumption because hundreds of journalists and popularizers of science have succeeded in convincing too many of the computer laity that computers think, or almost think, or are about to think, or can even think better than humans can. And this notion has been encouraged and exploited by many computer scientists who know, or should know, better. Many apparently feel that if the general public has a wildly exaggerated idea of what computer scientists have accomplished in this direction, so much the better for their chances when funds are disbursed and authority granted. The point at which AI ideology affects important real-world matters has, then, already been passed. Even 36 years ago, it was corrupting a debate among decision-making parties on one of the most urgent issues of the day. The intervening years do not offer encouragement about this state of affairs, should one of those senators soon become the president himself.