Saving the Self in the Age of the Selfie

We must learn to humanize digital life as actively as we’ve digitized human life—here’s how

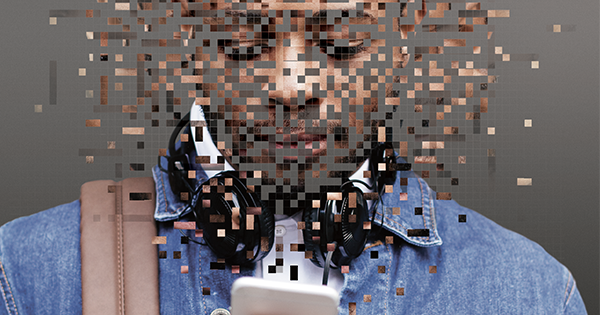

In 2012, Paul Miller, a 26-year-old journalist and former writer for The Verge, began to worry about the quality of his thinking. His ability to read difficult studies or to follow intricate arguments demanding sustained attention was lagging. He found himself easily distracted and, worse, irritable about it. His longtime touchstone—his smartphone—was starting to annoy him, making him feel insecure and anxious rather than grounded in the ideas that formerly had nourished him. “If I lost my phone,” he said, he’d feel “like I could never catch up.” He realized that his online habits weren’t helping him to work, much less to multitask. He was just switching his attention all over the place and, in the process, becoming a bit unhinged.

Subtler discoveries ensued. As he continued to analyze his behavior, Miller noticed that he was applying the language of nature to digital phenomena. He would refer, for example, to his “RSS feed landscape.” More troubling was how his observations were materializing not as full thoughts but as brief Tweets—he was thinking in word counts. When he realized he was spending 95 percent of his waking hours connected to digital media in a world where he “had never known anything different,” he proposed to his editor a series of articles that turned out to be intriguing and prescriptive. What would it be like to disconnect for a year? His editor bought the pitch, and Miller, who lives in New York, pulled the plug.

For the first several months, the world unfolded as if in slow motion. He experienced “a tangible change in my ability to be more in the moment,” recalling how “fewer distractions now flowed through my brain.” The Internet, he said, “teaches you to expect instant gratification, which makes it hard to be a good human being.” Disconnected, he found a more patient and reflective self, one more willing to linger over complexities that he once clicked away from. “I had a longer attention span, I was better able to handle complex reading, I did not need instant gratification, and,” he added somewhat incongruously, “I noticed more smells.” The “endless loops that distract you from the moment you are in,” he explained, diminished as he became “a more reflective writer.” It was an encouraging start.

But if Miller became more present-minded, nobody else around him did. “People felt uncomfortable talking to me because they knew I wasn’t doing anything else,” he said. Communication without gadgets proved to be a foreign concept in his peer world. Friends and colleagues—some of whom thought he might have died—misunderstood or failed to appreciate Miller’s experiment. Plus, given that he had effectively consigned himself to offline communications, all they had to do to avoid him was to stay online. None of this behavior was overtly hostile; all of it was passive. But it was still a social burden, reminding Miller that his identity didn’t thrive in a vacuum. His quality of life eventually suffered.

Miller recalled the low point of this period of social isolation. He was walking to the subway one evening with several friends. When they reached the platform, his companions did as he once would have done: they whipped out their smartphones and went into other worlds. Feeling awkward, he stood on the platform, looked into his empty hand, and simulated using a smartphone. “I now called it my dumb phone,” he said. When the offline year ended, he was relieved.

RECLAIMING CONTROL

Whether it’s to find information, entertainment, or social engagement, we reflexively seek to be wired—sometimes obsessively, usually uncritically, always expectantly—into other venues. But for all the seemingly infinite benefits of connectedness, our intensifying screen time is stunting our attention spans. The creeping inability to stay focused in the digital age is buttressed by a wealth of scientific research (e.g., “Human Attention Span Shortens to 8 Seconds”) and endless anecdotes from mindful individuals no longer able to read a real book or have a face-to-face conversation without “phubbing”—glancing at the phone while talking to someone. Naturally, some digital evangelicals insist that everything is fine, that digital multitasking is a good thing, honing our brains to solve highly fragmented 21st-century problems. Nonetheless, we’re hearing new stories about attention-related worries every day (November 28, 2015, in The New York Times: “I opened a book and found myself reading the same paragraph over and over …” ) because, alas, they’re real.

The underlying concern with the Internet is not whether it will fragment our attention spans or mold our minds to the bit-work of modernity. In the end, it will likely do both. The deeper question is what can be done when we realize that we want some control over the exchange between our brains and the Web, that we want to protect our deeper sense of self from digital media’s dominance over modern life. Miller is something of an anomaly; most people don’t fret over the quality of their thought. But he’s also typical, because like an increasing number of Internet users, he knows that the digitized life is beginning to alienate us from ourselves.

What we do about it may turn out to answer one of this century’s biggest questions. A list of user-friendly behavioral tips—a Poor Richard’s Almanack for achieving digital virtue—would be nice. But this problem eludes easy prescription. The essence of our dilemma, one that weighs especially heavily on Generation Xers and millennials, is that the digital world disarms our ability to oppose it while luring us with assurances of convenience. It’s critical not only that we identify this process but also that we fully understand how digital media co-opt our sense of self while inhibiting our ability to reclaim it. Only when we grasp the inner dynamics of this paradox can we be sure that the Paul Millers of the world—or others who want to preserve their identity in the digital age—can form technological relationships in which the individual determines the use of digital media rather than the other way around.

CYBERNETIC TYRANNY

Meanwhile, the other way around prevails. Consider Erica, a full-time college student. The first thing she does when she wakes up in the morning is reach for her smartphone. She checks texts that came in while she slept. Then she scans Facebook, Snapchat, Tumblr, Instagram, and Twitter to see “what everybody else is doing.” At breakfast, she opens her laptop and goes to Spotify and her various email accounts. Once she gets to campus, Erica confronts more screen time: PowerPoints and online assignments, academic content to which she dutifully attends (she’s an A student). Throughout the day, she checks in with social media roughly every 10 minutes, even during class. “It’s a little overwhelming,” she says, “but you don’t want to feel left out.”

We’ve been worried about this type of situation for thousands of years. Socrates, for one, fretted that the written word would compromise our ability to retell stories. Such a radical shift in communication, he argued in Phaedrus, would favor cheap symbols over actual memories, ease of conveyance over inner depth. Philosophers have pondered the effect of information technology on human identity ever since. But perhaps the most trenchant modern expression of Socrates’ nascent technophobia comes from the 20th-century German philosopher Martin Heidegger, whose essays on the subject—notably “The Question Concerning Technology” (1954)—established a framework for scrutinizing our present situation.

Heidegger’s take on technology was dire. He believed that it constricted our view of the world by reducing all experience to the raw material of its operation. To prevent “an oblivion of being,” Heidegger urged us to seek solace in nontechnological space. He never offered prescriptive examples of exactly how to do this, but as the scholar Howard Eiland explains, it required seeing the commonplace as alien, or finding “an essential strangeness in … familiarity.” Easier said than done. Hindering the effort in Heidegger’s time was the fact that technology was already, as the contemporary political philosopher Mark Blitz puts it, “an event to which we belong.” In this view, one that certainly befits today’s digital communication, technology infuses real-world experience the way water mixes with water, making it nearly impossible to separate the human and technological perspectives, to find weirdness in the familiar. Such a blending means that, according to Blitz, technology’s domination “makes us forget our understanding of ourselves.”

The only hope for preserving a non-technological haven—and it was and remains a distant hope—was to cultivate what Heidegger called “nearness.” Nearness is a mental island on which we can stand and affirm that the phenomena we experience both embody and transcend technology. Consider it a privileged ontological stance, a way of knowing the world through a special kind of wisdom or point of view. Heidegger’s implicit hope was that the human ability to draw a distinction between technological and nontechnological perception would release us from “the stultified compulsion to push on blindly with technology.”

Of course, Heidegger didn’t know any software engineers. “It is impossible,” writes Jaron Lanier, author of You Are Not a Gadget and the recognized father of virtual reality, “to work with information technology without also engaging in social engineering.” Those responsible for “the designs of the moment,” he warns, “pull us into life patterns that gradually degrade the ways in which each of us exists as an individual.” When our lives become “defined by software,” we become “entrapped in someone else’s recent careless thoughts.” Zadie Smith, the novelist, applied this idea to Facebook and concluded “everything in it is reduced to the size of its founder. … A Mark Zuckerberg production indeed!” Although the thoughts underscoring digital designs may be careless of our interests, they are not random. They generally reward commerce over creativity, collective data over individual expression, and most notably, numbness over nearness. In these ways, they lay the basis for what Lanier calls “cybernetic totalism.”

If thinkers from Plato to Heidegger to Lanier have highlighted the threat of an overweening technology, it’s people like Paul Miller and Erica—digital natives born into a digital world—who have to live with it more fully. The most thoughtful analyses of our digital mentality—beyond Lanier’s book there are Nicholas Carr’s The Shallows, Sven Birkerts’s Changing the Subject, Sherry Turkle’s Reclaiming Conversation, and William Powers’s Hamlet’s BlackBerry—all portray younger generations (and the rest of us, too, to a large extent) as technologically stupefied, often without knowing it. Collectively, these works suggest that if we haven’t already crossed the Rubicon into digitized submission, leaving behind a hollowed-out generation of human gadgets, then we’re well on our way. If this sounds histrionic, visit a college campus, attend a public lecture, go to a gym, ride public transportation, or look around the next time you’re stopped at a traffic light and you’ll realize that, alas, they’re right. And that raises a critical question:

What’s the digital machine’s secret?

THE ALLURE OF EVER PRESENCE

When I examine my own relationship with digital technology, I like to think I can walk away from it without much fuss. My analog past—I took a typewriter to college—has stayed with me as a frayed lifeline to the digital present. But for Generation Xers and millennials, there is far less analog past. And even for many older users, much of that past is long forgotten. No lifeline exists, no stable point of reference, no accessible alternative. Only the digitized moment remains. And that moment has seduced us with a particularly compelling promise: to make us present and ever present at once; to be attentive here and there at the same time. Such a universal human desire is, in itself, irresistible. But when the tool aiming to fulfill this promise also fits in our hand and responds to our thumbs, then it requires heroic effort to escape the alluring verisimilitude of ever presence.

But catch your breath for a second and it hits you—the idea is ridiculous. A genuine self can’t be in two places, much less five or six, at one time—at least not in any meaningful way. One indication that we’re becoming aware of this limitation is the collective emotional state of today’s heavy users: they’re a wreck. As The Chronicle of Higher Education recently reported, college students—almost all of whom are wired to the hilt—are among the most anxious adults in human history. “Tech anxiety abounds,” writes Alexis Madrigal in The Atlantic. Even Tech Times highlights the pervasive “pressure and anxiety of being constantly available on social media.” This unfortunate news is everywhere.

As my conversation with Erica confirms, though, anxiety has an upside, one that suggests how we might face our digital predicament. A low hum of digital anxiety was certainly evident when Erica and I talked. She wanted to be fully present in the conversation. To a large extent, she was. As the State Department might put it, we had a thoughtful discussion covering a range of weighty issues. But with her phone face-up on the table between us, the technological means to be elsewhere—to have a voice here and there at once—was two inches from her hand. After telling me how satisfying she found riding her bike to campus—“When I’m riding my bike, I’m forced to pay attention to the world around me”—Erica then completed her thought by doing something that embodied the digital anxieties of ever presence. She reached for her phone, paused, caught herself, and then yanked her hand back, grimacing as if she’d just touched a hot iron.

It was a telling maneuver. We smiled. The challenge we were discussing in the abstract was now manifested right under our noses. Yes, we agreed, it’s hard not to look at that thing! Yes, we also agreed, we feel techno-guilt because we understand that deep down, the reach for distraction disrupts something important—in this case, a conversation. Our residual sensibility is the silver lining to our digital dilemma. Erica’s admonishment, inspired from within, represents the opportunity to recover a sense of identity in a digital culture because it confirms our awareness not only that we’re ceding ourselves to false digital promises, but also that we’re not at all happy about the behavior that results. We don’t want to be enslaved to a device. We want to regain a measure of control. Doing so will require something you might not expect—courting exactly the experience that digital life promises to end: stress.

THE SELF UNDER STRESS

If there’s a single factor that enables us to resist the dominant paradigm—technological or otherwise—it’s a strongly anchored identity. But such an identity is what digital life threatens. To achieve nearness, to understand why Miller ended up alone when he went analog, we must appreciate the finer details of how digital gadgetry erodes our sense of self. Digital life, with its emphasis on convenience, speed, anonymity, interchangeability, control, pleasure, and entertainment, promises to ease us through the day. Easing us through the day sounds perfectly splendid. But the price of admission for such convenience is a compromised self. Digital technology, by removing (or at least promising to remove) a certain mode of stress from our lives, simultaneously weakens the weapons we’d otherwise use to resist its dominance. Stress, in this sense, is like oxygen for the identity. Insofar as digital technology sucks it from the room, we are left too depleted to notice, much less care enough to fight back.

By stress, I don’t mean anything dramatic or exceptional. Commonplace activities qualify. Experiences such as being bored, arguing with a friend, falling in love, resisting temptation, flouting convention, learning a craft, breaking up with a partner, playing an instrument, giving a speech, reading Faulkner, memorizing a poem—these everyday analog endeavors are invaluable because they force us to confront discomfort. When we face these challenges, we might fail, fight back, become frustrated, succeed, lose our temper, calm down, feel confidence, overeat, undereat, go running, suffer insecurity, and so on. Through these experiences, in an honest attempt to face real challenges, we ultimately develop an anchored, adult identity capable of standing up to our digital demons.

Daily life offers endless opportunities to cultivate character-building behaviors that, once they become habitual, can nurture our weapons of resistance rather than exchange them for the conveniences of the Internet. Four of these habits stand out as essential to the preservation of an anchored identity: spending time alone, engaging in meaningful conversations, forming friendships, and pursuing an activity within a community. Imagine, if you can, an identity that’s permitted to develop with minimal interference from digital culture and you’ll begin to grasp the benefits that these four kinds of stresses can have on a self hoping to develop a healthier relationship with digitized life.

BEING AND NOT BEING ALONE

We begin in isolation. Being alone, though uncomfortable, allows us to reflect on what we love and fear. We experience love and fear from birth, but by adolescence we finally have the cognitive wherewithal to consider why we are thrilled by classical guitar but repulsed by snakes, and this awareness enables us to imprint our private preferences on our innermost self. If we can tolerate being alone, without distraction, we will hear the whisperings of our consciousness telling us that we’re the only person on earth who can answer the crucial question, “What’s it like to be you?” Nothing, I’d suggest, confirms the value of isolation better than this question.

But isolation can only be temporary. Time spent alone with your thoughts and feelings is a preamble to another stressful experience: conversation. Contrary to the expectations of today’s tapping tribe of texters, conversation when done right is more than the functional exchange of content. As Sherry Turkle, whose Reclaiming Conversation is subtitled The Power of Talk in the Digital Age, writes, real talk is something we invest in to receive a “payoff in self-knowledge, empathy, and the experience of community.” It demands that we introduce a fragile self into social space, throw the verbal dice, and hope for connection. No matter how challenging, conversation helps people “discover what they have hidden from themselves.” In this respect, it requires us to court stress by forcing us to be patient with the ambiguity and awkwardness of an unscripted exchange. (If you don’t think conversation is stressful, think of how many seemingly innocuous ones you try to avoid.)

This tension between self and society—between time alone and time with others—leads us to the third element of identity protection: friendship. From casual relationships to romantically intimate ones, the defining commonality is the risk of revealing more than what you’d ever dare post on Facebook. It’s about providing privileged access to the inner self. In the simplest terms, friendship derives from one’s willingness to risk self-disclosure and the other’s willingness to reciprocate. The result can culminate in the catastrophe of rejection. But if all goes well, if reciprocation ensues, the basis is laid for what Bennett Helm, in Love, Friendship, and the Self, believes is “what makes us be persons.”

One of the most interesting recent discoveries about companionship is that it thrives best in defined social contexts—a sports team, a religious community, a fraternity, a band, a club, a gang, a cult, any group where others can confirm your special commitment. This brings us to the final element of identity protection—participation in a group activity. In The World Beyond Your Head: On Becoming an Individual in an Age of Distraction, Matthew B. Crawford notes that the Western obsession with individualism has fostered an “inattentional blindness” to the “shared world.” This unthinking myopia has engendered a form of solipsism whereby the self believes that it somehow accounts, sui generis, for its own revelations. Such exceeding faith in one’s tender bloom of selfhood erases all awareness that, in Crawford’s words, “we rightly owe to one another a certain level of attentiveness and ethical care.” Instead, it’s all about you.

As an antidote to such navel gazing, Crawford suggests involvement in a skilled endeavor, ideally one pursued collaboratively with fellow aficionados. Such a social arrangement requires the individual to teach and be taught, to lead and be led, to humble and be humbled, all the while reducing the individual to the larger imperatives of tradition and expertise. In this way, relationships—born of time alone and nurtured by conversation—are granted the depth they deserve. A fully formed self can thereby protect itself, in the realm of nearness, by dictating how it will engage the digital world, rather than submit to its imperial designs.

DIGITAL DISARMAMENT

The four habits described above are essential to building a complete self. They provide the raw experiences that make you you. When fully formed, they protect us as individuals from the digital onslaught because they usher us toward what William Powers in Hamlet’s BlackBerry calls the “satisfying immersion” into our “inner selves.” It’s the kind of immersion that Paul Miller celebrated before his experiment went dark. But the sneakiest quality of digital life is that while promising to enhance the habits that inform identity, it guts them of their strength, leaving us unknowingly vulnerable to the allure of digital media. It’s an insidious process. Digital technology vows to improve the quality of our lives by making everything faster and easier even as it purloins that quality, leaving behind anxious users twitching for little more than the chance to indulge the next new thing.

How this disarmament happens is complicated, but it starts with boredom. Much of modern life is dull. David Foster Wallace understood and conveyed the nature of this boredom with poignancy. In a famous Kenyon College commencement address, he recalled standing in a long line at the grocery store after a hellish day at work: “The store is hideously, fluorescently-lit, and infused with soul-killing Muzak or corporate pop, and it’s pretty much the last place you want to be.” Wallace insisted, in his novel The Pale King, that learning to deal with such situations leads to a meaningful life. The goal is “to find the other side of the rote, the picayune, the meaningless, the repetitive, the pointlessly complex. To be, in a word, unborable.”

The miraculous smartphone—which arrived in earnest after Wallace’s death in 2008—seems to have accomplished the admirable goal of making its owner unborable. Who could ever be alone and bored with this Holy Grail of a device providing constant connection between here and there? Plus, when its charming distractions wash our brains in dopamine with every ding, how can we possibly resist it while facing the tedium of a grocery store line? Grabbing the smartphone has for good reason become the ultimate default move against boredom.

But it’s not really dealing with anything. Being alone doesn’t count as being alone if the boredom underscoring the experience is outsourced to an app—that is, if the stress of the experience is erased. The essential fact about being alone and bored is that the moment becomes yours when you engage it through the idiosyncrasies of your own consciousness. When you own it. Uncomfortable as it is, being alone must therefore be a radically self-absorbed endeavor because only in the quiet space between tedium and distraction can we make lasting choices about who we are, what we believe, who we want to become, what we want to support, and—most relevant for our purposes—what we want to resist. Again, David Foster Wallace:

If you’ve really learned how to think, how to pay attention, then you will know you have other options. It will actually be within your power to experience a crowded, loud, slow, consumer-hell-type situation as not only meaningful but sacred, on fire with the same force that lit the stars—compassion, love, the sub-surface unity of all things. Not that that mystical stuff’s necessarily true: the only thing that’s capital-T True is that you get to decide how you’re going to try to see it. You get to consciously decide what has meaning and what doesn’t. You get to decide what to worship.

Each time we allow social media to make for us that critical choice of what to worship, we consign our identity to software. We eschew the power of what the French philosopher Gaston Bachelard called “my secret of being in the world” for the cheap comfort of a Twitter scroll. Even as digital distraction promises to alleviate boredom, it removes from the existential equation the founding prerequisite for identity development—the individual, alone, facing nothing. When the smartphone transports our consciousness elsewhere, which it does every time we pick it up to avoid the stress of isolation, our most private choices suddenly hew not to the undiscovered ambitions of a curious mind, but to the commercial designs of a data-driven cloud.

The consequences of this distraction reverberate beyond the lone individual clutching an $800 antidote to boredom. A self that’s reluctant to descend alone into the rabbit hole of boredom is a self that, in Turkle’s assessment, allows virtual connection to replace conversation as the basis of all social relations. Champions of digital technology have long praised the extensive labyrinth of online communication. The Internet and its satellite devices do generate unprecedented connectivity, but critics who analyze our interactions within that network are far less sanguine about the discussions that ensue. Digital technology might foster effusive outreach, but it fails to produce the stress that otherwise animates actual conversation. Texting or chatting online, or even exchanging emails, enables users to avoid the edgy ambiguity of a face-to-face exchange. Some conversations become, as one interviewee told Turkle, “cleaner, calmer, and more considered” when carried out in digital space. Others—such as a breakup with a romantic partner—can be avoided altogether. Indeed, millennials often terminate a relationship by simply ceasing to text. In either case, the heat of the moment—which is when the emotions animating a conversation emerge—is dissipated by digital distance.

When you’re self-editing a text or email, you control the message. When you’re firing away face-to-face, you don’t. Anything can happen. The realization that what might come out of your mouth might surprise even you—ditto for what your partner sends your way—reminds us that there’s more magic in a conversation than anything happening between two well-curated Facebook pages.

It’s not hard to see how the demise of conversation degrades relationships. A tactile conversation requires that we empathize in order to test the waters of friendship. But empathy withers when the self turns its back on conversation. Digital natives in particular have embraced online ersatz friendships as the genuine article. But they do so with no appreciation of who’s orchestrating those relationships or what effect they might have on their empathy. As Jaron Lanier reminds us, the engineers who set the mold for online interactions have nothing invested in the relationships that follow. He notes, “When we [technologists] deploy a computer model of something like learning or friendship that has an effect on real lives, we are relying on faith.” Millennials appear to accept without question the legitimacy of virtual relationships forged in digital space. But Lanier asks them: “Can you tell how far you’ve let your sense of personhood degrade in order to make the illusion work for you?”

The final identity-shaping endeavor that digital substitution tarnishes is participation in a community pursuing shared excellence. Adherents of online communities (especially advocates of online education) assert that virtual meetings democratically broaden access to community experiences. They open the gates to all who want to enter. This rhetoric can be inspiring, but it overlooks how online communities insulate participants from critical aspects of otherwise healthy communities, aspects such as hierarchies of expertise and the chance to learn in shared physical space, under the actual gaze of peers who can directly experience the fullness of your expressions.

Nothing highlights the crucial difference between real and artificial communities more than the seedy nature of dialogue in online forums. Rhetorical nastiness festers online not because people are natural trolls, but because the community, as it were, is displaced from the human context. The individual, participating in the virtual community from the position of “me,” cannot judge his relative position in a shifting hierarchy of talent. Instead of assessing his weaknesses or sharing his strengths, the loner at the laptop sits back, lashes out, and exchanges what Crawford calls “the earned independence of judgment” for what too often becomes an unearned rant. The vitriol that flows so fluidly in virtual forums originates in a digitized culture that, by seducing us into its realm, enables us to bypass elemental human interactions that would never have left Paul Miller standing on the subway platform, alongside so-called friends, staring into his bare hand.

RECOVERY

In the face of digital disarmament, if not because of it, the human-digital relationship is edging toward what some technologists call “the fourth industrial revolution.” This transformation, according to Klaus Schwab, leader of the World Economic Forum, promises to collapse “the physical, digital, and biological worlds” into one. Wall Street and Silicon Valley are thrilled about it. But for anyone concerned with the fate of human autonomy in the digital age, this prediction, even if overstated, is sobering. As the revolution looms, we must both understand the mechanics of digital disarmament and recover our ability to confront it. This is not to suggest that we should aim to abolish digital media or disconnect completely—not at all. Instead, we must learn to humanize digital life as actively as we’ve digitized human life.

No one solution can restore equity to the human-digital relationship. Still, whatever means we pursue must be readily available (and cheap) and offer the convenience of information, entertainment, and social engagement while promoting identity-building experiences that anchor the self in society. Plato might not have approved, but the tool that’s best suited to achieve these goals today is an object so simple that I can almost feel the eye-rolls coming in response to such a nostalgic fix for a modern dilemma: the book. Saving the self in the age of the selfie may require nothing more or less complicated than recovering the lost art of serious reading.

What other activity so deftly balances undistracted isolation and engaged social interaction? In one respect, reading first requires the self, stripped bare to one’s consciousness in the face of another’s narrative, to confront a text. (Kafka called reading “an axe for the frozen sea within us.”) In another respect, reading pulls us out of ourselves into various communities of thought, venues in which empathy and interpretation converge at a single point of reference. Anyone who has ever shared enthusiasm for a book understands Emerson’s definition of friendship as a phenomenon where you and another person “see the same truth.” And even if the interpretations that come from isolated inquiry end up clashing, George Eliot’s statement that reading is “a mode of amplifying experience and extending our contact with our fellow men” still holds true. For those who feel increasingly helpless in the face of digital tyranny, the book may not be the ultimate weapon of the weak, but it’s as effective and accessible a point of departure as I can imagine.

Not everyone agrees. Some literary critics have already turned out the lights on literature. In a 1988 essay called “The End of Bookishness?” George Steiner wondered whether our 500-year-old habit hadn’t reached its endpoint, finally deprived of “the economics of space and of leisure on which a certain kind of ‘classical reading’ hinges.” That said, several hopeful signs suggest that reading—like slow food and vinyl records—is making a comeback. Of the dozens of people I spoke to about online habits, only one—a 71-year-old man, no less—had cleared his bookshelves to embrace what he called “the digitized information revolution.”

The rest continue to hold special reverence for reading, most of them wishing they did more of it. Many young people are actively seeking a formal time-out from digital culture to foster a literary frame of mind. Chelsea Rustrum, co-author of It’s a Shareable Life, told me that millennials, “feeling really disconnected from each other and not knowing why,” are attending “Digital Detox retreats” to prepare their minds for more contemplative endeavors. The precious few millennials who already do read as if life depended on it have an opportunity—yes, because of the Internet—to parlay their literary passion into criticism relevant for a generation unburdened by an inherited canon. Jonathan Russell Clark, a 30-year-old staff writer for Lit Hub and a widely published book critic, told me that his most important objective as a young critic was to reveal his own love of reading books as a way to inspire new readers. “The onus is on us,” he said, referring to the responsibility of a younger generation of critics to spark a redemptive interest in serious reading.

There’s no telling how these trends will play out. But as the fog of digital life descends, making us increasingly stressed out and unempathetic, solipsistic yet globally connected, and seeking solutions in the crucible of our own angst, it’s worth reiterating what reading does for the searching self. A physical book, which liberates us from pop-up ads and the temptation to click into oblivion when the prose gets dull, represents everything that an identity requires to discover Heidegger’s nearness amid digital tyranny. It offers immersion into inner experience, engagement in impassioned discussion, humility within a larger community, and the affirmation of an ineluctable quest to experience the consciousness of fellow humans. In this way, books can save us.